Why Your Biggest Wins Don’t Show Up in Revenue

And how to prove the value of experimentation anyway

👋 Welcome back!

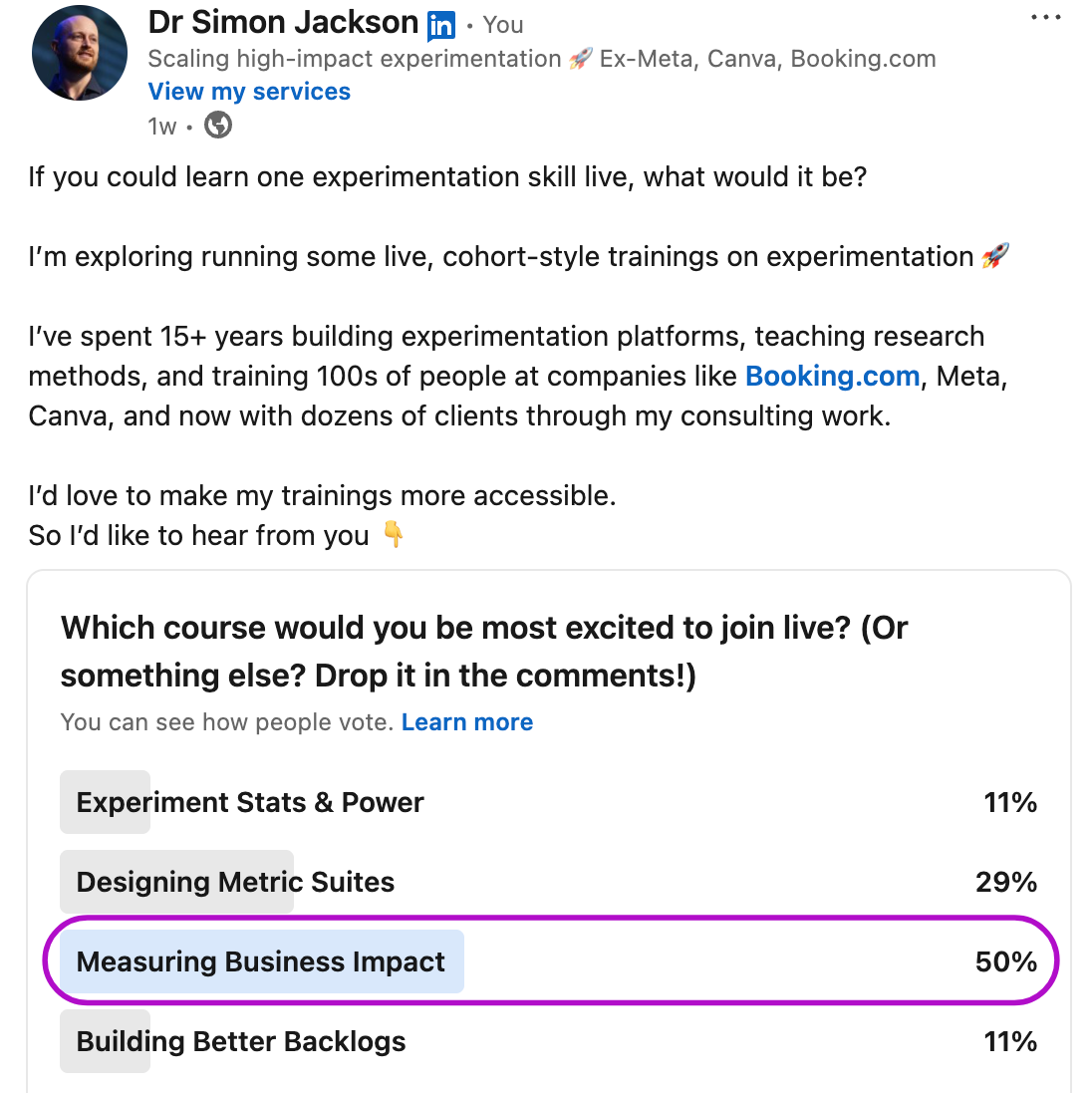

A couple of weeks ago I ran this LinkedIn poll asking what experimentation skill people would most like to learn live. The clear winner? Measuring Business Impact.

It makes sense: this is the single most common challenge I see with clients. Teams run good experiments, see strong local wins… and then leadership asks: “So why isn’t revenue moving?”

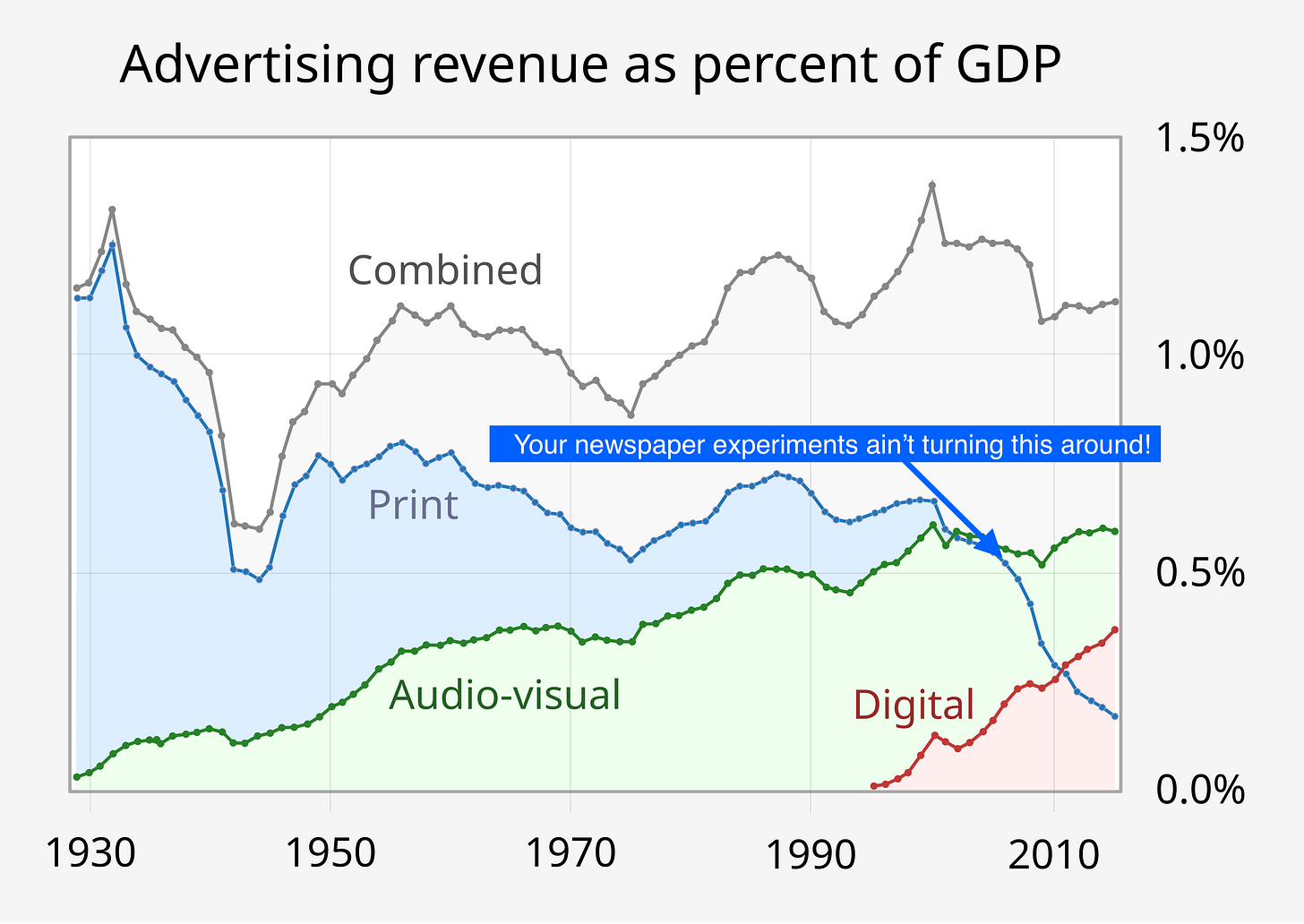

The newspaper problem

Here’s the visceral analogy I like to use: imagine you’re running experiments on a daily newspaper. Remember those? 😉

You might test different layouts, typography, or headlines. You might make the articles more engaging, and improve how many people read past page three. You might even boost retention among loyal readers.

All good things. But will you stop the overall decline of print media? Of course not. That’s a global trend, driven by forces much bigger than your page layout. At best, your work slows the decline.

The same is true for digital products or any business:

Experiments isolate the effect of a specific change.

That’s their superpower. They strip out noise so you can ask: “Did this change cause that effect?”

But that local effect is usually too small to show up in topline KPIs like revenue or churn, especially when dozens of other changes and external forces are at play.

So ironically, the thing that makes experimentation amazing—isolating cause and effect—is also why you can’t expect one test to “move the needle” at the macro level.
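To make the scale of that gap concrete, here is a rough back-of-the-envelope sketch. Every number in it is hypothetical, and the function name `topline_effect` is mine, not a standard one. It assumes the tested flow contributes a fixed share of total revenue and that nothing else changes.

```python
def topline_effect(local_lift, revenue_share):
    """Relative change in total revenue from a lift on one slice of revenue.

    Simplifying assumption: the rest of the business is unchanged.
    """
    return local_lift * revenue_share

# Hypothetical inputs, for illustration only
local_lift = 0.05     # the test lifted revenue from the tested flow by 5%
revenue_share = 0.10  # that flow drives 10% of total revenue
weekly_noise = 0.03   # total revenue typically swings about 3% week to week

effect = topline_effect(local_lift, revenue_share)
print(f"Topline effect: {effect:.2%}")
print(f"As a share of a typical weekly swing: {effect / weekly_noise:.0%}")
```

With inputs like these, a solid local win ends up as a fraction of a percent at the top line, well inside the normal week-to-week movement.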

Why this disconnect matters

Where teams go wrong is in how they interpret or communicate this gap:

Over-promising: Telling leadership that a test will move revenue, and then struggling when it doesn’t.

Under-valuing: Discarding valid learnings because topline metrics stayed flat.

Frustrating execs: Leaving leaders questioning whether experimentation really matters.

This isn’t a problem with the experiments themselves. It’s a problem with expectations and translation.

The three layers of Value

High-impact teams look at experiment outcomes on three levels:

Local value: The isolated test result. Did this change drive a specific, desirable behaviour or outcome (e.g. onboarding completion, checkout conversions)?

Portfolio value: How the accumulation of changes across many experiments impacts overall business performance. No single article saves the newspaper, but 100 small improvements compound.

Strategic value: The learnings that shape long-term decisions (to GO and to NO-GO). Even a “neutral” result can prevent costly mistakes or reframe how a business approaches growth.

If you only look at topline metrics, you’ll miss most of the value experimentation creates.

How to show business impact the right way

Here’s what I advise teams to do instead:

✅ Use proxy metrics

Find leading indicators that link to long-term business goals (activation, engagement, funnel conversion). Show how they connect to longer-term retention or revenue.

✅ Roll up portfolio impact

Communicate results as part of a broader portfolio. “These 10 onboarding tests together lifted activation by 7%, which equates to X new actives a month.”

Side note: there is an art and science to this which I’m teaching live (see here).
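As a very rough sketch of the arithmetic behind a roll-up like that, the snippet below compounds a set of shipped wins and converts the result into extra active users. The function names and all inputs are hypothetical. It also assumes the lifts are independent and stack multiplicatively, which is a simplification: observed lifts from winning tests tend to overstate the true effect, so treat the output as an upper-end estimate rather than a forecast.

```python
from math import prod

def combined_lift(lifts):
    """Compound relative lifts, assuming each shipped win stacks multiplicatively."""
    return prod(1 + lift for lift in lifts) - 1

def extra_actives_per_month(monthly_signups, baseline_activation, lift):
    """Extra activated users per month implied by a relative lift in activation."""
    return monthly_signups * baseline_activation * lift

# Hypothetical inputs, for illustration only
shipped_lifts = [0.02, 0.01, 0.015, 0.005, 0.02]  # relative lifts from shipped tests
monthly_signups = 20_000
baseline_activation = 0.40

total = combined_lift(shipped_lifts)
extra = extra_actives_per_month(monthly_signups, baseline_activation, total)
print(f"Combined activation lift: {total:.1%}")
print(f"Extra actives per month: {extra:.0f}")
```

Only wins that actually shipped belong in the list. A test that won but was never implemented contributes nothing.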

✅ Track decision follow-through

The biggest value killer is wins that never get shipped. Measure implementation rates. Value isn’t realised until decisions change.

✅ Translate results into exec language

Frame outcomes as business enablers: “This change unblocked the sales team” or “This reduced risk on a $20m product launch.”

✅ Bring experimentation into strategic decisions

Experimentation shouldn’t just optimise buttons or layouts. The real value is when it informs strategic choices, like whether to pivot from print to digital or launch a new pricing model.

Engaging executives (and knowing when not to)

One of the most practical levers for turning experiment results into business impact has nothing to do with metrics or methods. It’s about engagement.

If execs don’t understand the disconnect between local experiment results and topline metrics, you have to invest time in educating them.

Share analogies like the newspaper example.

Walk through concrete examples of how experiments provided evidence that guided the business.

Be clear about both the power and the limits of experimentation.

And, when you can, show them how experimentation can help answer the bigger strategic questions: Should we shift from print to digital? Should we double down on self-serve or sales-led?

These conversations build trust and position experimentation as a strategic asset, not just a tactical testing function.

But here’s the kicker. After doing this across companies, industries, and exec teams, I’ve seen a consistent truth:

👉 Some executives lean in. They believe in being evidence-based, and they’ll take the time to listen, learn, and apply.

👉 Others don’t. It’s their way or the highway. No amount of data or analogies will change their mind.

When you run into the second type, it’s important to recognise the reality: even with enormous effort, you’re unlikely to shift them. At that point, you have to ask yourself a different question:

Do I want to keep fighting this fight here, or would my time and energy be better spent with leaders and teams who are eager to learn, test, and grow?

Because in my experience, the world is full of the first type. And those are the businesses and people that run better experiments, make better decisions, and grow faster.

Back to NAV

If you’re new here, NAV is the framework I use to define and diagnose high-impact experimentation. I introduced it in my first edition, which you can read here.

NAV breaks impact into three levers:

Number: how much you’re testing

Accuracy: how much you can trust the results

Value: whether those results are actually shaping outcomes and strategy

And of the three, Value is the hardest—but most important—to move. Because it’s where experimentation either proves itself as a growth engine, or stalls out as a reporting function.

The newspaper may still be in decline. But if your experiments are improving the product, unblocking teams, and shaping smarter decisions, you’re doing the work that slows the fall and sets you up for the next growth curve.

What’s next

Given how strongly this topic resonated in the poll, I’m running some live workshops on Measuring Business Impact. Specifically, they cover techniques for translating experiment results into business-metric-level measures (like showing how an uplift in onboarding translates into an X% increase in revenue).

If that sounds useful, check out availability at drsimonj.com/impact-cohort 🚀

And in the meantime, I’d love to hear from you:

👉 What’s the biggest challenge your team faces in proving experimentation’s business impact?

Until next time 🙌

— Simon

Helping businesses scale high-impact experimentation

drsimonj.com

Other useful resources by DrSimonJ 🎉

💥 Measuring Business Impact with Experimentation, live cohort course

🧮 The Experimenter’s Power Calculator to quickly plan high-quality experiments

📚 The Experimenter’s Dictionary to find practical definitions and resources

🗞️ The Experimenter’s Advantage Newsletter for more of these in your inbox

✅ The NAV Benchmark survey to evaluate the impact of your program